With the integration of wind and solar power into the electric grid, power forecasts informed by Numerical Weather Predictions (NWPs) have become critical to grid operation and energy markets. The day's notice provided by NWPs gives Transmission System Operators the time and flexibility to anticipate changes in wind and solar plant output economically. Extreme forecast errors of wind and solar power are rare, yet they may cost as much as several thousand Euro/MWh. In addition, maintaining balancing power to correct for such forecast errors imposes a constant cost on everyday operation.

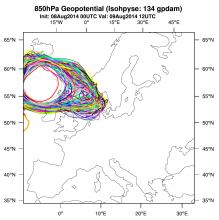

The limited predictability of weather models calls for probabilistic forecasts realized by ensembles, in which each ensemble member is a distinct model realization with a different outcome, so that the ensemble as a whole gives a measure of uncertainty (the figure shows the geopotential isolines of each ensemble member, where ensemble dispersion indicates forecast uncertainty). Operational weather centers generate tens of ensemble members, which gives a good indication of the ensemble spread. However, resolving the tails of the probability distributions, which represent such extreme error events, calls for much larger ensembles. We are now in a position to run thousands of ensemble members in parallel in order to set up a demonstrator warning system for high-impact events in the energy sector during the ongoing phase of the EoCoE project.
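The link between ensemble size and tail resolution can be made concrete with a small sketch. It is illustrative only and not taken from the EoCoE system: the function names are hypothetical, and the synthetic "forecast errors" are plain Gaussian draws standing in for real ensemble output. The key point is that an empirical exceedance probability estimated from N members cannot resolve any nonzero probability smaller than 1/N.

```python
import random
import statistics


def ensemble_spread(members):
    """Sample standard deviation across ensemble members."""
    return statistics.stdev(members)


def exceedance_probability(members, threshold):
    """Fraction of ensemble members whose value exceeds the threshold."""
    return sum(1 for m in members if m > threshold) / len(members)


def smallest_resolvable_probability(n_members):
    """Smallest nonzero probability an empirical estimate can return."""
    return 1.0 / n_members


if __name__ == "__main__":
    rng = random.Random(0)
    # Assumption: synthetic standard-normal "forecast errors", for illustration only.
    for n in (50, 4000):
        members = [rng.gauss(0.0, 1.0) for _ in range(n)]
        print(
            n,
            ensemble_spread(members),
            exceedance_probability(members, 3.0),
            smallest_resolvable_probability(n),
        )
```

With tens of members the spread is already estimated reasonably, but an event with a probability of, say, one in a thousand will usually not appear in the sample at all, which is why much larger ensembles are of interest for rare, high-impact errors.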

For this purpose, an efficient ultra-large ensemble control system for the Weather Research and Forecasting Model (WRF) on an IBM Blue Gene HPC architecture has been developed within EoCoE in collaboration between domain scientists and HPC experts. The system is designed to realize particle filter assimilation efficiently with an unlimited ensemble size. Multiple assimilation cycles may be performed within a single application, enabling communication among numerous ensemble members. Strong parallel scaling has been demonstrated with up to 4000 ensemble members. The code has been adapted so that existing model error schemes can be applied efficiently, reducing computation time by a factor of up to ten.
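For readers unfamiliar with particle filter assimilation, one analysis step can be sketched as follows. This is a generic textbook-style bootstrap particle filter on scalar states, not the scheme implemented in the EoCoE system: the Gaussian observation error, the systematic resampling, and all function names are assumptions made for illustration. Each ensemble member (particle) is weighted by how well it matches an observation, and members are then resampled in proportion to those weights.

```python
import math
import random


def importance_weights(particles, observation, obs_std):
    """Normalized weights, assuming a Gaussian observation error (illustrative)."""
    log_w = [-0.5 * ((observation - p) / obs_std) ** 2 for p in particles]
    max_log = max(log_w)  # subtract the maximum for numerical stability
    w = [math.exp(lw - max_log) for lw in log_w]
    total = sum(w)
    return [wi / total for wi in w]


def effective_sample_size(weights):
    """Number of particles that effectively carry weight (1/sum of squares)."""
    return 1.0 / sum(w * w for w in weights)


def systematic_resample(particles, weights, rng):
    """Draw len(particles) members in proportion to their weights."""
    n = len(particles)
    start = rng.random() / n  # single random offset in [0, 1/n)
    resampled = []
    cumulative = weights[0]
    j = 0
    for i in range(n):
        position = start + i / n
        # Advance to the particle whose cumulative weight covers this position.
        while position > cumulative and j < n - 1:
            j += 1
            cumulative += weights[j]
        resampled.append(particles[j])
    return resampled


if __name__ == "__main__":
    rng = random.Random(1)
    # Assumption: scalar states drawn from a broad prior, one synthetic observation.
    particles = [rng.gauss(0.0, 2.0) for _ in range(1000)]
    weights = importance_weights(particles, observation=1.5, obs_std=0.5)
    print(effective_sample_size(weights))
    analysis = systematic_resample(particles, weights, rng)
    print(sum(analysis) / len(analysis))
```

The effective sample size shows why large ensembles matter here: after weighting, only a fraction of the members carries significant weight, so a small ensemble can collapse onto very few distinct states.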